As the title suggests, after many years working in large enterprises, service providers, and most recently partners, I am headed to a network vendor. This is a big change for me, but a very good one. I am tremendously excited about the opportunity.

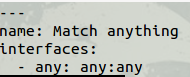

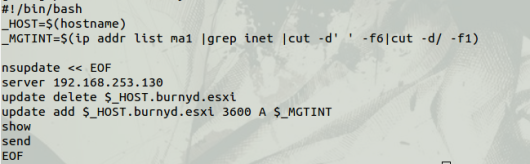

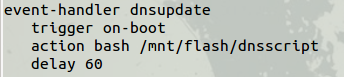

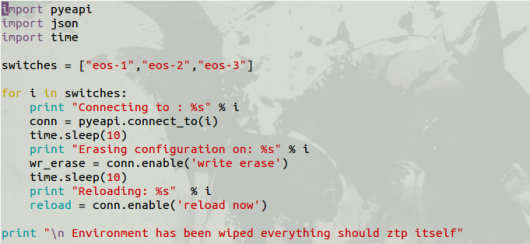

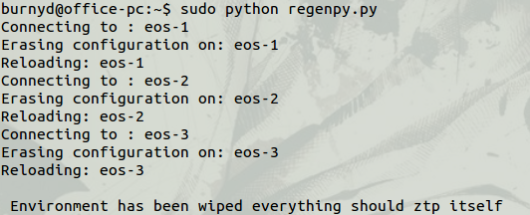

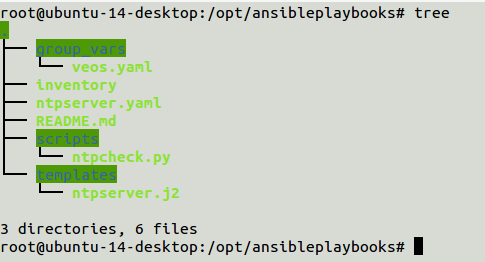

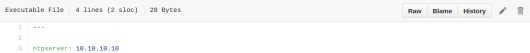

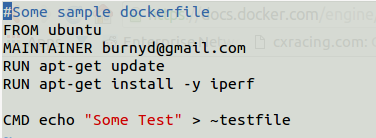

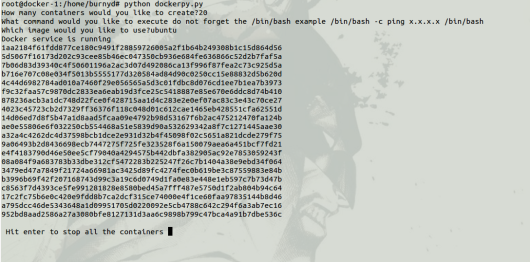

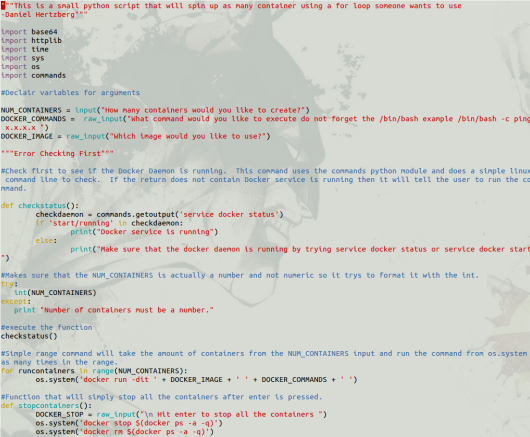

I am joining Arista for multiple reasons. I was able to bring in Arista at a prior position and watch the entire network change drastically. We were able to automate many tasks that were previously manual. It seems like every time I turn around, Arista has integrated with another technology, such as Docker, OpenStack, or Go.

I was fortunate enough to attend Tech Field Day last year, where Ken Duda, CTO of Arista Networks, delivered one of the most awesome presentations on the evolution of EOS and code quality.

Everyone I have met at Arista has been amazing. Given the talent and passion of every individual in the organization, this was an easy decision. The move fits my career goals of working towards SDN, continuous integration/continuous delivery, and all things automation. I start in late August.

Source: A new journey with Arista